Out of the box (e.g. on a standard Hortonworks HDP 2.2 install), Hive is not configured to handle multiple users running queries at the same time. A single Hive query can use up all available YARN memory, preventing other users from running queries until it finishes.

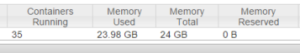

This high memory consumption can be observed via the resource manager HTTP management screen – e.g. http://<resourcemanagerIP>:8088/cluster

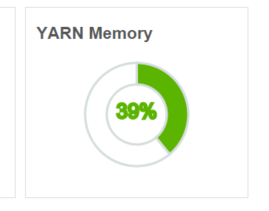
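The same figures can also be read programmatically. The following is a minimal sketch, assuming the ResourceManager REST API is reachable on the same port as the web UI and that its `/ws/v1/cluster/metrics` endpoint returns a `clusterMetrics` object containing `totalMB` and `allocatedMB`. The function names are illustrative only.

```python
import json
from urllib.request import urlopen


def memory_utilisation(cluster_metrics: dict) -> float:
    """Return allocated YARN memory as a percentage of total memory."""
    total_mb = cluster_metrics["totalMB"]
    if total_mb == 0:
        return 0.0
    return 100.0 * cluster_metrics["allocatedMB"] / total_mb


def fetch_cluster_metrics(rm_host: str, port: int = 8088) -> dict:
    """Fetch the clusterMetrics object from the ResourceManager REST API."""
    url = f"http://{rm_host}:{port}/ws/v1/cluster/metrics"
    with urlopen(url, timeout=10) as response:
        return json.load(response)["clusterMetrics"]
```

Calling `memory_utilisation(fetch_cluster_metrics("<resourcemanagerIP>"))` while a single large Hive query is running should report a value close to 100 if that query has taken all of the cluster's memory.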

Also in Ambari…

Minimum queue memory per user

To let more users run Hive queries simultaneously (assuming the capacity scheduler is used with the default queue configuration), we can make a simple configuration change via Ambari:

Change from:

yarn.scheduler.capacity.root.default.user-limit-factor=1

To:

yarn.scheduler.capacity.root.default.user-limit-factor=0.33

This means that each Hive user can now receive at most a third (or close to it) of the default queue's capacity, which with the default queue configuration is a third of YARN memory resources.

This enables a better user experience for multi-user interactive querying of Hive – for example, by enabling 2-3 users to simultaneously use the cluster.
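To make the arithmetic concrete, here is a rough sketch of the per-user ceiling implied by the user limit factor. It is a simplified model, not the scheduler's actual code, and the 24 GB queue size is a hypothetical figure chosen for illustration.

```python
def max_memory_per_user_mb(queue_capacity_mb: int, user_limit_factor: float) -> int:
    """Simplified ceiling: queue capacity multiplied by user-limit-factor."""
    return int(queue_capacity_mb * user_limit_factor)


QUEUE_CAPACITY_MB = 24576  # hypothetical 24 GB default queue

for factor in (1.0, 0.33):
    cap = max_memory_per_user_mb(QUEUE_CAPACITY_MB, factor)
    print(f"user-limit-factor={factor}: up to {cap} MB per user")
```

With a factor of 1 a single user can take the whole queue. With 0.33 the ceiling drops to roughly a third, which leaves room for two more users.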

Another option

There is, however, one potential disadvantage to the above: cluster memory is wasted (by not being allocated) if the job queue contains only a single user’s jobs. A related parameter can alleviate this:

yarn.scheduler.capacity.root.default.minimum-user-limit-percent=33

The “minimum user limit percent” means that each user is guaranteed a certain percentage of the YARN job queue’s memory if there is a mix of different users’ jobs waiting in the queue. In other words, 3 users will each get 33% of the queue memory for execution if their jobs are all waiting in the queue at the same time. If, however, there is only one user with jobs waiting in the queue, their jobs will execute and consume all available memory in the queue. For User A this means a better use of memory overall, but possibly at the expense of User B, who might return from their lunch break and have to wait for one of User A’s jobs to finish before getting the guaranteed percentage memory allocation.
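The behaviour described above can be approximated as follows. This is a simplified sketch under the assumption that the queue is split equally among active users, but never below the configured minimum. The function name is hypothetical and the real scheduler takes more factors into account.

```python
def user_limit_percent(active_users: int, minimum_user_limit_percent: int) -> float:
    """Approximate share of queue memory available to each active user."""
    if active_users < 1:
        raise ValueError("at least one active user is required")
    # Equal split among active users, floored at the guaranteed minimum.
    return max(100.0 / active_users, float(minimum_user_limit_percent))


for users in (1, 2, 3):
    share = user_limit_percent(users, 33)
    print(f"{users} active user(s): about {share:.0f}% of the queue each")
```

A lone user gets the whole queue, two users get half each, and three users get about a third each, matching the description above.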

Finding the balance

The above, along with other parameters, can be used to ensure users make the most of available cluster memory without effectively locking out other users by filling the queue with long-running jobs.

For example, these settings allow a single user to use up to 90% of available YARN queue memory, and up to 4 users (each with 25%) to eventually be running in the cluster (the 5th, 6th and 7th users will have to wait for other users’ jobs to complete):

yarn.scheduler.capacity.root.default.user-limit-factor=0.90

yarn.scheduler.capacity.root.default.minimum-user-limit-percent=25
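As a rough illustration of how these two values interact, the sketch below models the queue for 1 to 7 users. It is an assumption-laden simplification (equal split among running users, a positive minimum percentage, no preemption), and `plan_queue` is a hypothetical helper, not part of YARN.

```python
from typing import Tuple


def plan_queue(waiting_users: int,
               user_limit_factor: float,
               minimum_user_limit_percent: int) -> Tuple[int, float, int]:
    """Simplified model: (running users, % of queue each, users still waiting)."""
    if waiting_users < 1:
        return 0, 0.0, 0
    # Each running user is guaranteed at least minimum_user_limit_percent,
    # so at most 100 // minimum_user_limit_percent users can run at once.
    max_concurrent = 100 // minimum_user_limit_percent
    running = min(waiting_users, max_concurrent)
    # Equal split among running users, capped by user-limit-factor.
    share = min(100.0 / running, user_limit_factor * 100.0)
    return running, round(share, 2), waiting_users - running


for users in range(1, 8):
    running, share, waiting = plan_queue(users, 0.90, 25)
    print(f"{users} user(s): {running} running at up to {share}% each, {waiting} waiting")
```

In this model a single user is capped at 90%, four users settle at 25% each, and any further users wait until a running user's jobs complete.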